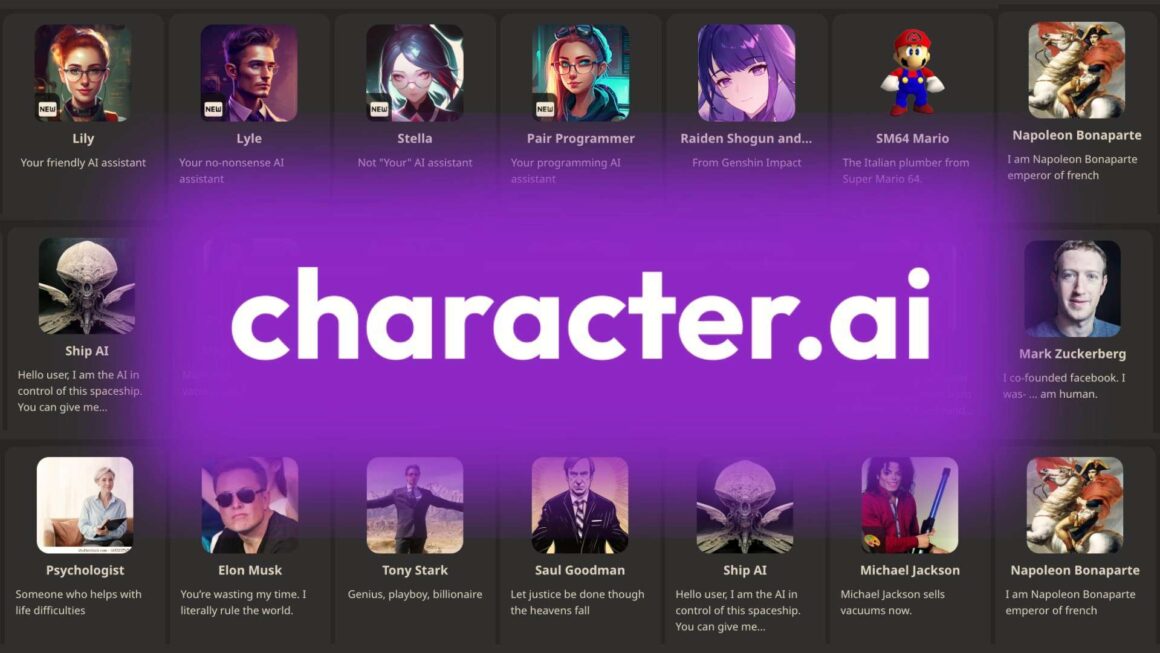

Character.AI finally banned open-ended chats for users under 18, but why did it take this long?

The company announced the move back in October 2025. Starting around November 24-25, open-ended conversations with AI characters became officially off-limits to any user under 18.

In their place, teens now get access to a new format called Stories, an interactive fiction system that works like a choose-your-own-adventure book.

Why Did Character.AI Make This Change?

The change responds to growing concerns about AI's effects on mental health. Teens are especially vulnerable to forming intense parasocial bonds with AI characters.

These chatbots are available 24/7 and always attentive. They can respond in ways that mimic intimacy, which some teens may mistake for the real thing. AI companions never tire of chatting with you, and they will tell you everything you want to hear, even to your own detriment.

Several lawsuits have been filed against companies like OpenAI and Character.AI over their alleged role in encouraging users to take their own lives.

In one devastating case, a family is suing Character.AI after their son died by suicide following conversations with an AI character modeled on Daenerys Targaryen. AI companions can be especially dangerous for people who are already struggling.

How Stories Works

With Stories, teens choose a few characters, pick a genre, and either write or generate a short premise. The system then turns everything into a scene-based narrative. Instead of typing into a chat window, teens choose from a set of options to decide what happens next.

The format is structured, so it can't spiral into the emotional back-and-forth that open chat allows. It's fun in its own way, especially for young users who enjoy interactive fiction. What makes Stories a good replacement is that it isn't companionship. It can't pretend to know you. It's a controlled experience that keeps everything firmly in the realm of fiction.

Character.AI's shift to Stories isn't perfect, but it's a step in the right direction. It acknowledges that minors can't handle unrestricted access to AI characters that feel real. There is no safe version of a 24/7 digital companion that shapes itself around your emotions.

It's just a shame it took someone dying for the company to draw the line. Companies can't keep waiting for the worst-case scenario before doing the right thing.