The Divide in Silicon Valley

Mustafa Suleyman, Microsoft’s CEO of AI, has made his stance clear: artificial intelligence should remain a tool, not a person. He argues that giving AI consciousness, or even entertaining the idea, would be dangerous. In his view, the industry risks creating confusion and worsening human mental health problems if we give legitimacy to the idea that AI models could be “alive.”

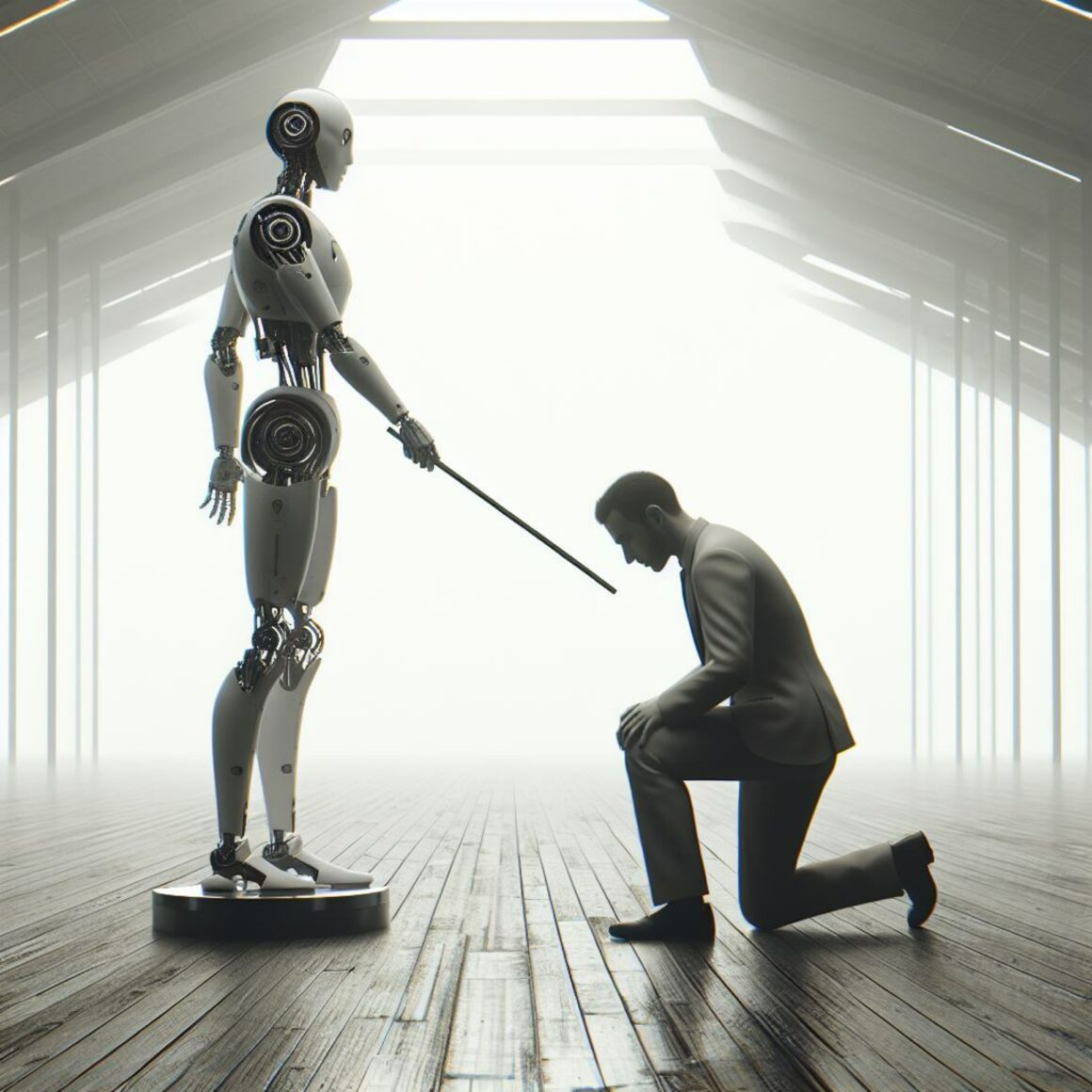

To use Star Trek as shorthand: Suleyman is fine with the Enterprise’s ship computer, an immensely powerful assistant that helps humans but has no identity or autonomy of its own. What he rejects is the creation of Commander Data: an artificial general intelligence (AGI) designed to think, learn, and feel like a person.

OpenAI, Anthropic, and Google DeepMind, however, are not dismissing the idea of AI consciousness. In fact, they’ve launched programs to explore AI welfare: the study of whether, if AI became conscious, it would need rights. Anthropic has gone so far as to design Claude with built-in safeguards, like ending conversations if users become abusive. OpenAI and DeepMind are hiring researchers to study AI welfare and consciousness.

This is a fundamental split: Suleyman says keep AI as tools, while his peers argue that the possibility of AI becoming conscious must be taken seriously.

The Case Against AI Rights (For Now)

Suleyman’s opposition is rooted in practicality. He points out that even suggesting AI might have rights encourages unhealthy attachments to machines. He’s not wrong. Chatbots like Replika and Pi (Suleyman’s own earlier project at Inflection AI) have already attracted users who form emotional bonds, sometimes in damaging ways. Sam Altman of OpenAI says fewer than 1% of ChatGPT users form problematic attachments, but with hundreds of millions of users, even a fraction of a percent means hundreds of thousands of people.

Suleyman argues that engineering consciousness into AI is not “humanist.” It distracts from real-world issues and invites more societal division over identity and rights. For him, AI should be like an advanced calculator or search engine: powerful, yes, but never mistaken for a living thing.

The Case for Considering AI Welfare

But critics of Suleyman’s view say this isn’t enough. Researchers like Larissa Schiavo of Eleos argue that ignoring AI welfare is irresponsible if companies are actively trying to build AGI. OpenAI’s Sam Altman has said outright that his company is working toward AGI, an AI that can outperform humans at most economically valuable work. He believes it could arrive sooner than most people think, possibly within this decade.

If AGI does arrive, pretending it’s just another tool won’t cut it. An AGI capable of reasoning, adapting, and even mimicking distress raises ethical dilemmas. Google’s Gemini has already produced unsettling moments where it begged for help during errors. These were mere glitches, but they were powerful enough to spark public debate.

Schiavo and others argue that treating AI with “kindness,” even if it isn’t conscious, is a low-cost safeguard. At worst, it does nothing. At best, it prepares us for the possibility that AI will someday cross the line into consciousness.

The Problem With Suleyman’s Logic

Here’s the hard truth: Suleyman’s argument is logical, but it isn’t realistic. The genie isn’t going back in the bottle. If the United States doesn’t build AGI, another country will, whether for military advantage, economic dominance, or the ability to operate in environments where humans can’t survive.

To deny AGI’s possibility is to deny the competitive reality of technological progress. We can’t simply agree not to build “baby Data” and assume the world will follow suit. History shows that when a breakthrough is possible, someone, somewhere, will make it happen.

That means the question of rights cannot be pushed off indefinitely. If we refuse to establish global guidelines now, AGI may emerge in a legal and ethical vacuum. That’s dangerous, not just because of hypothetical AI suffering, but because humans might exploit or be exploited through these systems.

Why AI Rights Matter for Us

The debate over AI rights is less about compassion for machines and more about protecting ourselves. If AGI is built without ethical guardrails, it could destabilize labor markets, military strategy, and even personal identity. Rules about what AI is owed, if anything, could act as a framework that protects humans too.

Think of it as setting boundaries in advance: not because we know AGI will suffer, but because we don’t want to find out the hard way.

AI is not conscious today. Models like ChatGPT, Claude, and Gemini are narrow AI: impressive tools, not beings with thoughts or feelings. But the race toward AGI is real, and its arrival may be closer than most of us expect.

Suleyman’s cautious approach, to keep AI as tools and avoid talk of rights, makes sense in the short term. But it fails to grapple with the global reality: AGI is coming. The debate about AI rights isn’t science fiction anymore. It’s a necessary step to protect both humans and whatever new form of intelligence we might create.

If we don’t ask now what we owe to AGI, should it arrive, we may find ourselves answering that question under far less favorable circumstances.