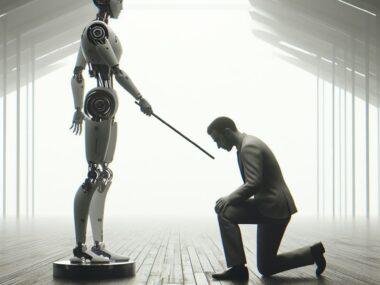

A Big Step for ChatGPT

OpenAI has released ChatGPT 5.0, with one of its biggest additions being Gmail and Google Drive integrations for pro users. The idea is obvious: if AI is going to function as a personal assistant, it should be able to read your email, summarize your files, and help you manage your information. Productivity gains are the clear selling point.

But the update lands in the shadow of a massive court battle with The New York Times, one that complicates how safe it really is to trust ChatGPT with your most private data.

The Lawsuit in the Background

The New York Times (NYT), along with other publishers, is suing OpenAI for copyright infringement. The claim is straightforward: ChatGPT allegedly outputs text that copies protected Times content. To prove it, the NYT demanded access to an extraordinary amount of evidence: 120 million ChatGPT user chat logs spanning 23 months.

OpenAI pushed back, offering a “statistically valid” sample of 20 million chats instead, supported by computer science testimony that this would be enough to test for patterns. Processing all 120 million would, according to OpenAI, take nearly a year and involve massive privacy risks.
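To get a feel for what a sample of that size can and cannot show, here is a rough back-of-the-envelope sketch in Python. It assumes simple random sampling and a yes/no question per chat (for example, "does this chat reproduce Times text?"), and it uses the standard margin-of-error formula for a proportion with a finite population correction. The function name and the 95% confidence level are illustrative assumptions, not anything taken from the court filings or from either side's expert testimony.

```python
import math


def margin_of_error(sample_size, population_size, proportion=0.5, z=1.96):
    """Approximate margin of error for an estimated proportion.

    Assumes simple random sampling without replacement. z=1.96 corresponds
    to a 95% confidence level, and proportion=0.5 is the worst case.
    """
    standard_error = math.sqrt(proportion * (1 - proportion) / sample_size)
    # Finite population correction: the sample is a large share of the whole.
    fpc = math.sqrt((population_size - sample_size) / (population_size - 1))
    return z * standard_error * fpc


# Figures from the dispute described above.
POPULATION = 120_000_000  # chat logs the NYT asked for
SAMPLE = 20_000_000       # chat logs OpenAI offered

moe = margin_of_error(SAMPLE, POPULATION)
print(f"Worst-case margin of error: {moe:.4%}")
```

Under those assumptions the worst-case margin of error comes out at roughly 0.02 percentage points, which is why a sample can be enough to estimate how common a pattern is. It says nothing about whether any particular chat outside the sample contains copied text, and that gap is what the two sides are arguing over.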

The real privacy alarm bell came in June 2025, when the court ordered OpenAI to retain all ChatGPT conversations indefinitely, even those users had deleted. For everyday users, this means that everything from private journaling to business emails drafted with ChatGPT is now being stored, at least until the case is resolved.

Where Privacy Gets Compromised

The troubling part isn’t the copyright fight itself. It’s how broad the court’s order is. The NYT doesn’t just want to see conversations where ChatGPT summarized a Times article. It wants to examine years of data for systemic patterns. And the court has backed it by requiring that all chats be preserved, even if most have nothing to do with the Times.

That means:

- Deleted chats are no longer deleted.

- Sensitive personal details like addresses, passwords, or financial information typed into prompts are preserved along with everything else.

- Even chats unrelated to journalism are swept into this net.

Enterprise customers, like universities or companies with Zero Data Retention (ZDR) agreements, are mostly shielded. For consumer users (Free, Plus, Pro, Team), the preservation is total.

Why This Is a Problem

The NYT is right to test whether its content was used improperly. Courts should decide whether fair use applies, as was debated in a recent case with Anthropic’s Claude AI. But protecting copyright cannot come at the expense of millions of users who never asked to be collateral damage.

What if those logs include conversations with minors? What about personal health information? The NYT doesn’t have a unique “writing style” that could justify demanding access to every chat ever written. And yet, the legal system has ordered consumer data preserved without meaningful safeguards.

This is where trust is broken. The burden has shifted from AI companies to ordinary people who never consented to have their private conversations exposed.

The New Feature Problem

Now, put the Gmail and Google Drive integration back into this picture. On paper, it’s a useful productivity upgrade. In practice, it means your inboxes, calendars, and personal files can all become material processed inside chats that are currently under indefinite preservation orders.

This doesn’t mean NYT lawyers are reading your email summaries. It does mean those conversations are stored and could eventually be exposed as legal evidence. The risk isn’t theoretical: a court order has already forced OpenAI to preserve data it once promised to delete.

Before connecting your accounts, ask yourself: do you really want your AI assistant holding on to conversations about your taxes, your business, or your family indefinitely?

The Bigger Question

What matters more: protecting consumer privacy or protecting a publisher’s copyright? The NYT has every right to test whether its work is being used unfairly. Consumers also have the right not to be dragged into a lawsuit that has nothing to do with them.

The court could have required the NYT to generate its own evidence using its own prompts. It could have required targeted sampling. Instead, it chose to preserve every user’s data by default.

That decision sets a dangerous precedent: in the push to regulate AI, consumers risk losing more privacy than they bargained for.

ChatGPT 5.0 makes AI more useful than ever, but usefulness doesn’t erase risk. Until this lawsuit is resolved, every user should think twice before linking personal accounts to their AI assistant. Corporations may talk endlessly about innovation, but the elephant in the room remains: without consumers, they cease to exist. Protecting our data should matter more than winning any lawsuit.