OpenAI is stepping into the social video arena with its new app, Sora. On the surface, it looks like the company's answer to TikTok: a short-form video platform where users can create and share clips up to 10 seconds long.

Rather than relying on recorded footage, Sora runs on OpenAI's own advanced video-and-audio generation model, Sora 2. Users can whip up eerily realistic videos complete with synced audio and simulated physics.

There’s even a feature called Cameos, where you film a short one-time video and audio clip for identity verification. From there, you can insert yourself or friends into videos, remix existing clips, or scroll a feed that looks a lot like TikTok’s “For You” page. It’s invite-only for now, available to iOS users in the U.S. and Canada, with Android and other regions coming later.

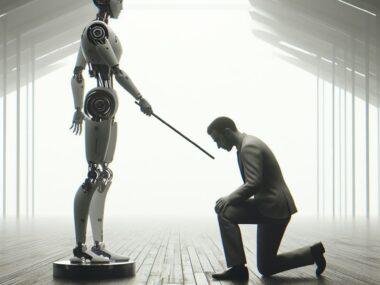

The Harmless-Looking Danger

At first glance, the app feels almost too playful to worry about. TechCrunch pointed out that users are already pumping out Pikachu deepfakes mocking Nintendo’s lawsuit-happy strategy. That kind of content looks harmless, which is what makes it so dangerous.

If you can put Pikachu in a video, you can also put politicians, CEOs, or celebrities in one. Anyone with enough personal data online is fair game. OpenAI insists that users control who can use their likeness, but it doesn't take much imagination to see how easily those systems could be bypassed.

The Biometric Question Nobody Should Ignore

Creating a Cameo requires handing over biometric data, essentially giving OpenAI your face, voice, and mannerisms. Officially, the company says this data is used only for verification and won't be used to train models. But given how valuable biometric datasets are, it would be naive not to question whether that firewall will hold.

Even if OpenAI itself plays fair, Sora is normalizing a new era of hyper-convincing deepfakes. Once the technology spreads further, the potential for misinformation, bullying, and harassment skyrockets.

Guardrails or Just Window Dressing?

OpenAI touts several safety measures: parental controls, permission requirements for Cameos, and the ability to revoke access or delete videos. That all sounds good on paper. But if history has taught us anything, it's that digital guardrails are only as strong as the people trying to break them.

Already, videos featuring OpenAI CEO Sam Altman are flooding the app; some are funny, while others are unsettling. That surreal blend of entertainment and unease will only intensify as Sora expands.

So what is Sora, really? A clever way to remix digital identities for fun? Or the beginning of a deepfake ecosystem that makes reality itself negotiable? The app is still in its invite-only phase, but the writing is on the wall. OpenAI is barreling into social media territory where the stakes are cultural, political, and personal.