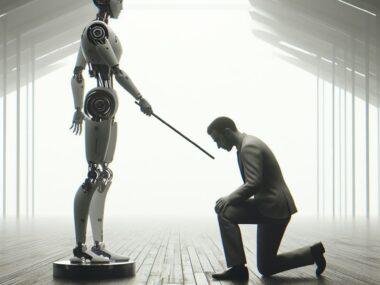

Online harassment isn’t anything new, but what’s happening now is different. Victims are experiencing a chilling new form of digital abuse: AI-generated death threats that use synthetic images, video, and even audio to make the threat look disturbingly real.

Some people have received AI-created videos that show them being attacked, or photos depicting their deaths. The effect is psychological warfare. Victims have felt violated because of how realistic the threats are.

The Danger of Open-Source Models

While a lot of attention has been given to OpenAI’s Sora and xAI’s Grok, far less has gone to local and open-source large language models (LLMs).

Local LLMs like Meta’s Llama or Google’s Gemma can be downloaded onto personal computers. Once installed, the model is practically clay in the user’s hands. With the right skills, anyone can modify or “jailbreak” these systems to bypass filters and safety guardrails. Jailbroken models, along with openly available image, video, and voice generators, can be used to create violent images, deepfake videos, or manipulated audio to harass people online.

The Legal System Isn’t Ready for AI-Generated Threats

The legal system wasn’t made for dealing with AI-generated threats. There’s no clear jurisdiction, and no obvious way to regulate local LLMs without invading someone’s privacy.

The system is designed to deal with traditional crimes. Law enforcement has a hard time tracing the source of a threat, which makes it difficult to respond.

Even forensic experts struggle to prove whether the image, video, or audio in a threat is AI-generated or real. Social media companies are also slow to monitor these types of threats on their platforms.

The rise of AI-generated threats exposes a simple truth: we are not ready for this kind of technology.

The Psychological Toll of Synthetic Violence

What makes AI-generated death threats harmful is their precision. These threats are customized: they look and sound like the victim. AI has gotten so advanced that a profile picture showing your face is more than enough to replicate your likeness. A five-second clip of you speaking can be enough to clone your voice.

Victims often describe the experience as both surreal and terrifying. The mind knows it’s fake, but the body still reacts as if it’s in danger. That’s what these AI threats exploit: the instinctive human response to danger. It’s a traumatizing experience for anyone who’s targeted this way.

The technology that powers AI-generated threats is evolving faster than our ability to contain it. Right now, neither the law, nor the companies working on these AI models, nor the public is prepared for what’s coming next.