The central question here is simple. Are people actually thinking for themselves about AI, or are they reacting to a fear campaign they never stopped to question?

From where I am sitting, the entire conversation feels backward. The loudest opinions call AI corrupt, soulless, and harmful, yet millions of people use it every single day. The contradiction is right there in the open. People claim to hate it, but they wake up and immediately rely on it for work, school, or creativity. It does not make sense unless something deeper is happening underneath.

That is exactly the problem. We are not talking honestly about AI. We are talking about the version people were told to fear.

The Public Conversation About AI Is Built on Fear, Not Reality

Tim Sweeney is right about one thing. AI is going to be everywhere. Not in the scary, sci-fi way people like to imagine, but in the boring, practical way technology always evolves. At some point, disclosure labels will be everywhere. They will be so common that people will stop noticing them. Steam’s mandatory AI label is just another early attempt at managing panic, not a long-term solution.

The panic is what concerns me.

People have gotten comfortable repeating the same negative talking points. AI is killing creativity. AI is stealing jobs. AI is slop. AI is hollow. AI is cheating. None of these statements hold up when you look at how the technology is actually being used.

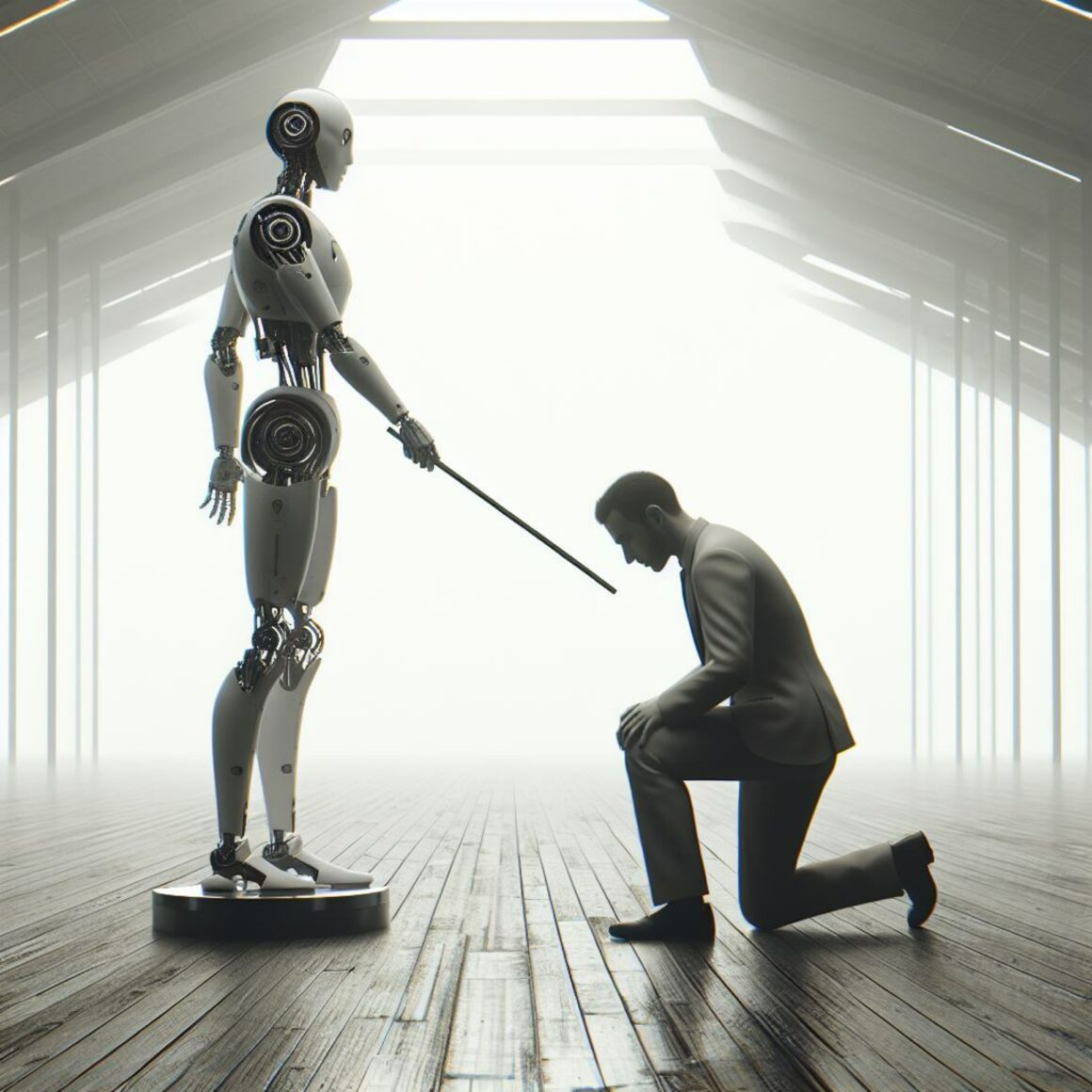

Right now, AI cannot function without a human guiding it. It does not wake up and decide to replace workers. It does not create coherent work without direction. It cannot even decide what “good” means. A human is still the one shaping the final outcome. A human is still the author of the ideas. A human is still the person spending hours building the final product.

That is the part most critics do not want to talk about. Once you acknowledge human labor is still the heart of the process, you can no longer pretend AI is some unstoppable monster threatening all creativity.

You have to face the truth. The panic was about the narrative, not the technology.

Rhetoric About “AI Slop” Sets Workers Up to Be Exploited

Here is where the fear becomes dangerous.

Imagine your boss tells you to use AI because it speeds up part of your workflow. You spend hours shaping the final result, polishing details, rewriting rough parts, and adding your voice. You still did the work. You still used your creativity. You still used your judgment.

Now imagine your boss says they are going to pay you less because AI helped you.

How would that feel?

Or imagine someone calls your work “AI slop” even though you spent your entire afternoon fixing, revising, and refining it. Is that fair? Is that honest?

This is the real risk no one wants to acknowledge.

The more people repeat “AI slop,” the more companies can take advantage of it. If something can be dismissed as slop, it becomes easier to underpay the worker behind it. If something is assumed to be effortless because AI was involved, your labor becomes invisible.

This is how workers lose power. Not because AI took their job. Because public opinion gave corporations permission to undervalue them.

That is the precedent we are setting right now.

Watermarks Are Turning Into Another Problem No One’s Thinking About

I understand why major companies are watermarking AI images or audio. They want provenance. They want oversight. They want accountability.

Here is the issue. Everywhere else, watermarks imply ownership.

If the public sees a watermark on a piece of work, they assume one thing. The entity behind the watermark owns it. So what happens when AI companies watermark content heavily edited by human artists and writers? What happens when that watermark stays attached to something a human spent hours shaping?

Suddenly the line between authorship and assistance gets blurry. A watermark can send the wrong social signal to the public. And to employers.

If someone mistakenly believes the AI owns the work, or that the AI did most of the work, the person who shaped it becomes invisible again. That creates a new opportunity for companies to undervalue human labor.

This is not a small issue. This is the kind of policy mistake that could take years to correct.

The US Is Falling Behind Because People Do Not Want to Learn

Other countries are adopting AI at rapid speed because they’re realistic. They understand that refusing to use AI does not hurt the AI. It hurts the worker who refuses to adapt.

Meanwhile, in the US, a surprising number of people still respond to AI with blanket hostility. They reject the technology entirely. They refuse to even understand what it can do. They treat learning AI as a moral issue, not a practical one.

This is how a country falls behind.

It is not about who builds the most advanced model. Do not be swayed by that. It is about who builds the most capable workforce. It is about who can think critically about the future instead of clinging to nostalgia. It is about who can adjust quickly enough to stay competitive.

Right now, the US is not winning that race.

If that gap gets any wider, it will not matter how loud the anti-AI rhetoric is. The jobs that remain will go to people who can work with the technology. Not people who refused to learn because they were told to be afraid.

Is the Public Being Manipulated Into Hating AI?

This question keeps coming up for me.

Do people genuinely hate AI, or have they been primed to hate it?

We are watching in real time as many citizens admit they fell for political propaganda and misinformation in other areas of their lives. Why would AI be the one topic that somehow escaped manipulation?

Some of the hostility toward AI feels too coordinated. Too repetitive. Too emotional. Too extreme. Almost like people are being nudged into a position they never examined for themselves.

Meanwhile, the usage numbers keep climbing.

If AI were truly despised, millions of people would not use it every day. Students would not depend on it. Workers would not rely on it. Creatives would not experiment with it. Developers would not build on top of it. The contradiction is right there. People do not hate AI. They hate the idea of being judged for using it.

That is the part no one wants to say out loud.

People need to stop reacting to AI based on fear, panic, and propaganda. They need to think for themselves. They need to look ahead and be honest about where the world is going. AI will be integrated into most careers one way or another. That is not a threat. That is reality.

If people refuse to see that, they will be making themselves easier to replace, easier to underpay, and easier to ignore.

It is time to learn the technology. It is time to protect your labor. It is time to think critically instead of emotionally. The future is coming whether people fear it or not. The only question left: who chooses to face it?