At what point do we lose the ability to tell if something is real? That’s the question some are asking thanks to OpenAI’s video generator, Sora. It’s gotten so advanced that even deepfake experts are struggling to tell whether the clips they’re looking at were generated by AI or not.

The Vanishing Visual Cues

It used to be easy to tell whether a video was made with AI. The giveaways were too many fingers, or skin that looked plastic or oddly colored. Earlier models also stumbled over basic physics, producing bodies with strange proportions or shadows that didn’t make sense. Those errors were our last line of defense, little reminders that the image in front of us wasn’t real.

Sora wipes those away. It understands motion, texture, and dimension so well that the glitches have all but vanished. The generated clips look like they could have been filmed on a real camera, under real lighting, with real people. That’s what makes it so dangerous.

The Illusion of Confidence

The average person scrolling through social media isn’t a deepfake expert. They’re not pausing to question whether a clip is AI-generated or real. If it looks convincing enough, they’ll accept it as fact. And most of the time, Sora’s videos are convincing enough.

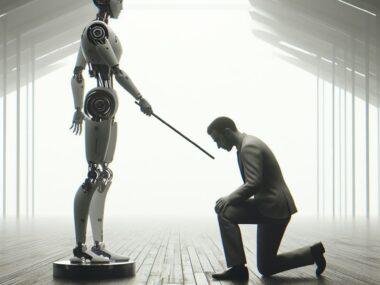

People are used to trusting what they see. In this new reality, that instinct becomes a liability. Once deepfakes look realistic enough to fool the eye, “seeing is believing” becomes a trap.

There are technical markers that are supposed to flag these clips as AI-made. Sora embeds digital metadata that can be used to verify whether a video was AI-generated.

Yet these markers don’t mean much when most platforms make them too obscure for viewers to notice, or when the people sharing a clip strip them out. Metadata that viewers never see does little to protect them. The burden still falls on the viewer to recognize when something is off.

Misinformation, Reinvented

The implications go far beyond fake news. Deepfakes are already being used for harassment, political manipulation, and revenge. Tools like Sora push that threat into a new phase. Anyone can create footage so believable that it can destroy a person’s reputation and relationships.

Imagine a video that shows someone using a racial epithet, and that person has no way to prove it’s fabricated. Now scale that up to millions of users, each generating and sharing ultra-realistic clips.

Sora’s evolution marks a turning point. The clues that used to help us spot deepfakes are fading fast. It’s not just that AI can generate convincing videos. We are also losing one of the few methods we had to detect them. The line between fiction and reality is dissolving quickly.